In its latest push to protect young people online, Meta has announced a wave of new safety features and policy updates aimed at shielding teens – and children featured on Instagram – from unwanted messages, nudity, and predatory behaviour. The updates build on Instagram's existing Teen Account tools, while also expanding protections to adult-run accounts that feature children under 13.

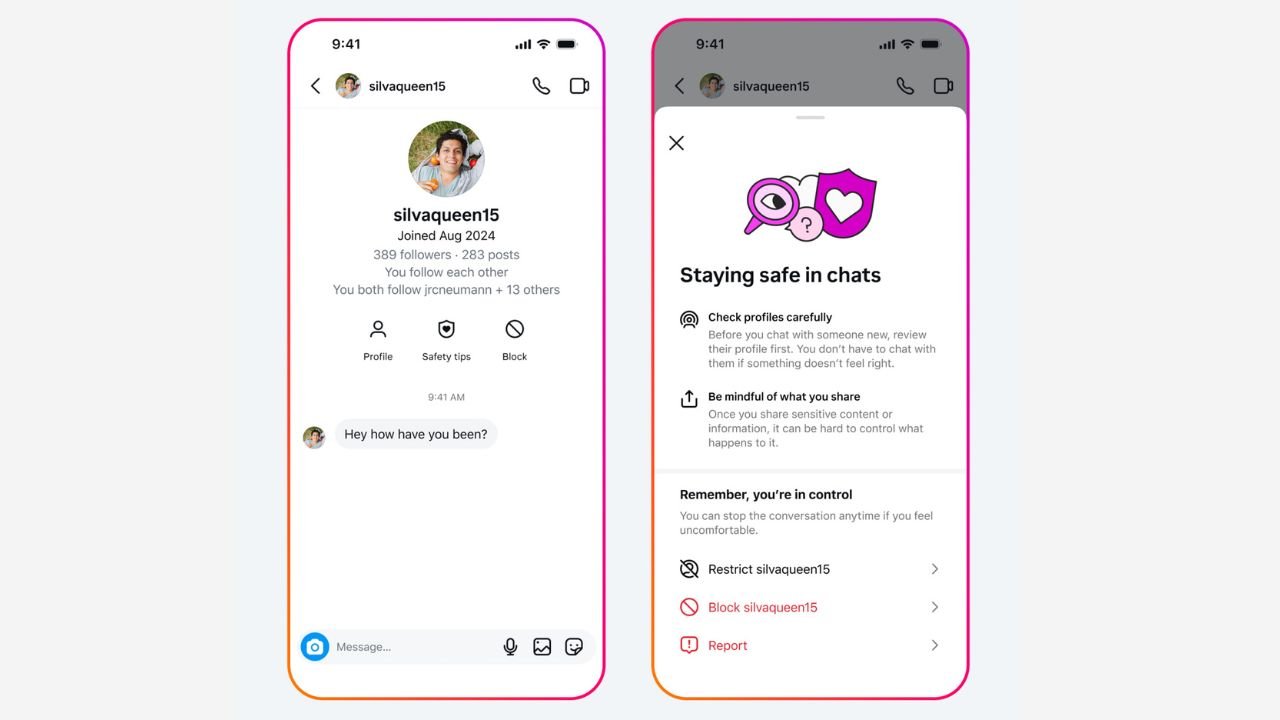

One of the most notable additions is a redesign of how teen users experience new direct messages (DMs). When a teen starts a new chat, Instagram now shows options to view safety tips, block the other user, and check when the account was created – all displayed prominently at the top of the message thread. These features help teens judge whether the person they're chatting with is legitimate or potentially suspicious.

To make it even easier to take action against shady behaviour, Meta is also rolling out a new “Block and Report” button – letting users do both in one tap. This combined feature aims to encourage teens to not just cut off contact but also report bad actors so Instagram’s enforcement teams can step in.

These tools appear to be making a real impact. In June alone, teens blocked other accounts 1 million times and reported another 1 million users after seeing safety notices in DMs. Meta also revealed that its Location Notice – an alert that pops up when someone appears to be messaging from another country – was shown 1 million times last month. One in 10 teens who saw the notice clicked through to learn what steps they could take.

Nudity protection is another feature Meta is doubling down on. Since its global launch, 99% of users have kept it enabled – teens included. In June, over 40% of blurred images stayed blurred, significantly reducing unwanted exposure to nudity in private messages. The feature also seems to prompt hesitation: in May, nearly half of users who received a blurred image chose not to forward it after seeing Meta’s warning.

But it's not just teens who are getting extra support. In a significant policy shift, Meta is now extending Teen Account-style protections to adult-managed accounts that primarily feature children – think "mum-run" kids' influencer pages or accounts for young talent.

These changes mean those accounts will automatically have Instagram’s strictest message settings applied, including Hidden Words filters that block offensive comments. Meta will also notify the adults running these accounts about the safety updates and encourage them to review privacy settings.

To limit the chances of harmful contact, Meta will stop recommending these accounts to adults who've shown potentially suspicious behaviour (such as those previously blocked by teens), and will likewise stop recommending those adults to these accounts. The accounts will also be harder to find in Search, and their comment sections will be better protected from predatory users.

And Meta isn't stopping there. Behind the scenes, the platform has also been removing violating accounts at scale. Earlier this year, Meta removed nearly 135,000 Instagram accounts that sexualised child-focused content and took down another 500,000 connected accounts across Instagram and Facebook. Meta also notified the users who had been targeted about the removals and encouraged them to block and report similar behaviour.

Finally, Meta recognises that child exploitation spans multiple platforms and now shares data with other tech companies through the Tech Coalition’s Lantern program – an industry-wide effort to root out online predators across the web.

The updates send a clear message: Meta is taking its responsibility to protect young users seriously. While there’s always more work to do, especially in the ever-evolving world of social media safety, these new tools offer meaningful improvements for teens, children, and the families who manage their accounts.